In 2017, Classical KUSC began searching for new ways to inspire young people to engage with classical music. Moodles is a first-of-its-kind musical instrument app that lets children and teenagers compose music without the banality of classes. The goal is to introduce music composition in a fun way that does not require lessons in music theory. The last thing the team wanted to do was build an iPad piano app so that your mom could tell you to practice!

To learn more about the project concept, brand, and product development, visit Skyground Media's Case Study.

This article is a technical deep dive into how the audio and visual components were integrated with real-time sharing.

Development

Developing Custom Animations

From the outset, the team wanted to provide custom animations for the music application. We worked with Swedish designer Karolin Gu to create a suite of new animations using Adobe After Effects.

From there, we used an After Effects plug-in called Squall to export the animations to Swift.

Moodles Animations

Developing Custom Sounds

We hired sound engineer Tim Yang to create a custom sound library to match Karolin's animations. Tim is brilliant and produced a sound palette in a few days; the engineers then learned how to package the samples into a SoundFont. SoundFont is a brand name for a file format and an associated technology that uses sample-based synthesis to play MIDI files.

Polyphone, a SoundFont editor, enabled us to package Tim's WAV files into a single "sf2" library. If you want to hear the sounds, you can download them, royalty-free, from the Internet Archive.

Working with MIDI

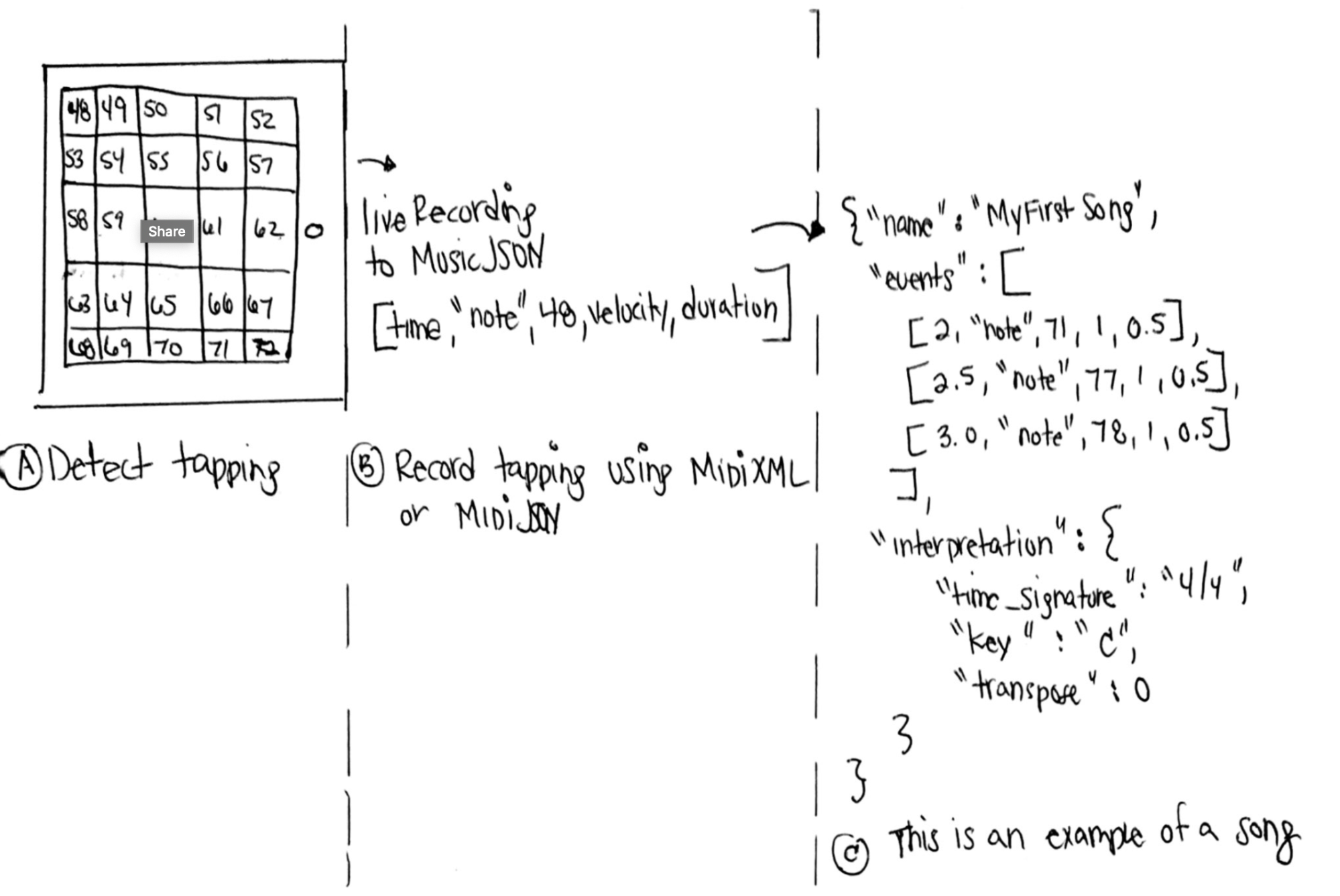

AudioKit Pro makes it easy to build iOS applications that use MIDI and Apple's Core Audio. Our first step towards building an instrument app was to map musical notes to our MIDI piano. The diagram shows how the team planned the mapping of each note to MIDI JSON.
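To make the note-to-MIDI mapping concrete, here is a minimal sketch of turning a note name and octave into a MIDI note number. The function name `midiNoteNumber` and the lookup table are hypothetical and not taken from the app; the sketch also assumes the common convention in which middle C (C4) is note 60, which is not the only octave numbering in use.

```swift
// Semitone offsets within one octave, starting at C (sharps only, for brevity).
let semitoneOffsets: [String: Int] = [
    "C": 0, "C#": 1, "D": 2, "D#": 3, "E": 4, "F": 5,
    "F#": 6, "G": 7, "G#": 8, "A": 9, "A#": 10, "B": 11
]

/// Returns the MIDI note number for a note name and octave, or nil if the
/// name is unknown or the result falls outside the MIDI range 0...127.
/// Assumption: middle C (C4) is note 60.
func midiNoteNumber(name: String, octave: Int) -> Int? {
    guard let offset = semitoneOffsets[name] else { return nil }
    let number = (octave + 1) * 12 + offset
    return (0...127).contains(number) ? number : nil
}

print(midiNoteNumber(name: "C", octave: 3) ?? -1)  // 48
```

Under this convention, note 48 is C3, which is the first note number used in the JSON examples later in this article.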

Here is a sample of the app's Swift code. These protocols make it possible to bridge the musical notes (i.e., pitch and rhythm) a user creates by tapping the screen to MIDI that we can later record, play back, and store in the cloud as a composition.

    import Foundation

    // Structures related to the music JSON format
    enum MIDIEventType: String {
        case note = "note"
        case param = "param"
        case control = "control"
        case pitch = "pitch"
        case chord = "chord"
        case sequence = "sequence"
    }

    protocol MIDIComposition {
        associatedtype TNoteEvent: MIDINoteEvent
        var noteEvents: [TNoteEvent] { get }
        var length: Double { get }
        var tempo: Double { get }
    }

    protocol MIDIEvent {
        var time: Double { get } // time in beats
        var type: String? { get } // one of MIDIEventType
    }

    protocol MIDINoteEvent: MIDIEvent {
        var duration: Double { get } // duration in beats
        var number: Int16 { get } // 0-127, the pitch of the note
        var velocity: Int16 { get } // 0-127
    }
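As an illustration of how these protocols might be adopted, the sketch below defines two small value types that conform to them. `SketchNoteEvent` and `SketchComposition` are hypothetical names; the app's real models (`MDNoteEvent` and `MDComposition`) are not shown in full in this article. Deriving `length` from the last note's end time is also an assumption made here for the example. The protocols are restated so the sketch compiles on its own.

```swift
import Foundation

// Protocols restated from the listing above so this sketch is self-contained.
protocol MIDIEvent {
    var time: Double { get }
    var type: String? { get }
}
protocol MIDINoteEvent: MIDIEvent {
    var duration: Double { get }
    var number: Int16 { get }
    var velocity: Int16 { get }
}
protocol MIDIComposition {
    associatedtype TNoteEvent: MIDINoteEvent
    var noteEvents: [TNoteEvent] { get }
    var length: Double { get }
    var tempo: Double { get }
}

// Hypothetical note type; every instance reports the "note" event type.
struct SketchNoteEvent: MIDINoteEvent {
    let time: Double
    let duration: Double
    let number: Int16
    let velocity: Int16
    var type: String? { return "note" }
}

// Hypothetical composition type.
struct SketchComposition: MIDIComposition {
    let noteEvents: [SketchNoteEvent]
    let tempo: Double
    // Assumption: length in beats is the latest point at which any note ends.
    var length: Double {
        return noteEvents.map { $0.time + $0.duration }.max() ?? 0
    }
}

let song = SketchComposition(
    noteEvents: [
        SketchNoteEvent(time: 2, duration: 0.5, number: 48, velocity: 127),
        SketchNoteEvent(time: 2.5, duration: 0.5, number: 49, velocity: 127)
    ],
    tempo: 60
)
print(song.length)  // 3.0
```

Because `noteEvents` is declared as `[SketchNoteEvent]`, Swift infers the `TNoteEvent` associated type without an explicit `typealias`.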

Bridging Music + JSON + Swift

We were inspired by the work of Andrew Madsen who showed us a way to bridge Music + JSON + Objective-C.

Once we could bridge music and JSON, and had a systematic way to record and play back any song, we began developing a musical quiz.

MIKMIDINoteEvent

    MIKMIDINoteEvent(timeStamp: 0, note: 48, velocity: 127, duration: 0.5, channel: 0)

JSON

    [3, "note", 50, 127, 0.5]

Bridging these two concepts enabled us to record when a user taps a UICollectionViewCell and convert the tap to JSON for data storage.

    {
        "name": "My First Song",
        "events": [
            // User Tap #1
            [2, "note", 48, 127, 0.5],
            // User Tap #2
            [2.5, "note", 49, 127, 0.5],
            // timeStamp, type, note, velocity, duration
            [3, "note", 50, 127, 0.5],
            [3.5, "note", 51, 127, 3.5],
            [10, "note", 52, 127, 0.5]
        ]
    }
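One way to produce this positional row format from Swift is a custom `Encodable` implementation that writes each event through an unkeyed container. This is a sketch under assumptions, not the app's actual encoder: `TapEvent`, `TapComposition`, and `encodeComposition` are hypothetical names introduced for the example.

```swift
import Foundation

// Hypothetical record of one tap; times and durations are in beats.
struct TapEvent: Encodable {
    let time: Double
    let number: Int      // MIDI note number, 0-127
    let velocity: Int    // 0-127
    let duration: Double

    // Encode as a positional array: [time, "note", number, velocity, duration].
    func encode(to encoder: Encoder) throws {
        var row = encoder.unkeyedContainer()
        try row.encode(time)
        try row.encode("note")
        try row.encode(number)
        try row.encode(velocity)
        try row.encode(duration)
    }
}

// Hypothetical wrapper matching the {"name": ..., "events": [...]} document.
struct TapComposition: Encodable {
    let name: String
    let events: [TapEvent]
}

/// Serializes a composition to a JSON string.
func encodeComposition(_ composition: TapComposition) throws -> String {
    let data = try JSONEncoder().encode(composition)
    return String(decoding: data, as: UTF8.self)
}

let firstSong = TapComposition(
    name: "My First Song",
    events: [
        TapEvent(time: 2, number: 48, velocity: 127, duration: 0.5),
        TapEvent(time: 2.5, number: 49, velocity: 127, duration: 0.5)
    ]
)
if let json = try? encodeComposition(firstSong) {
    print(json)
}
```

The encoder emits plain JSON, so the `//` annotations shown in the listing above would not appear in the stored document.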

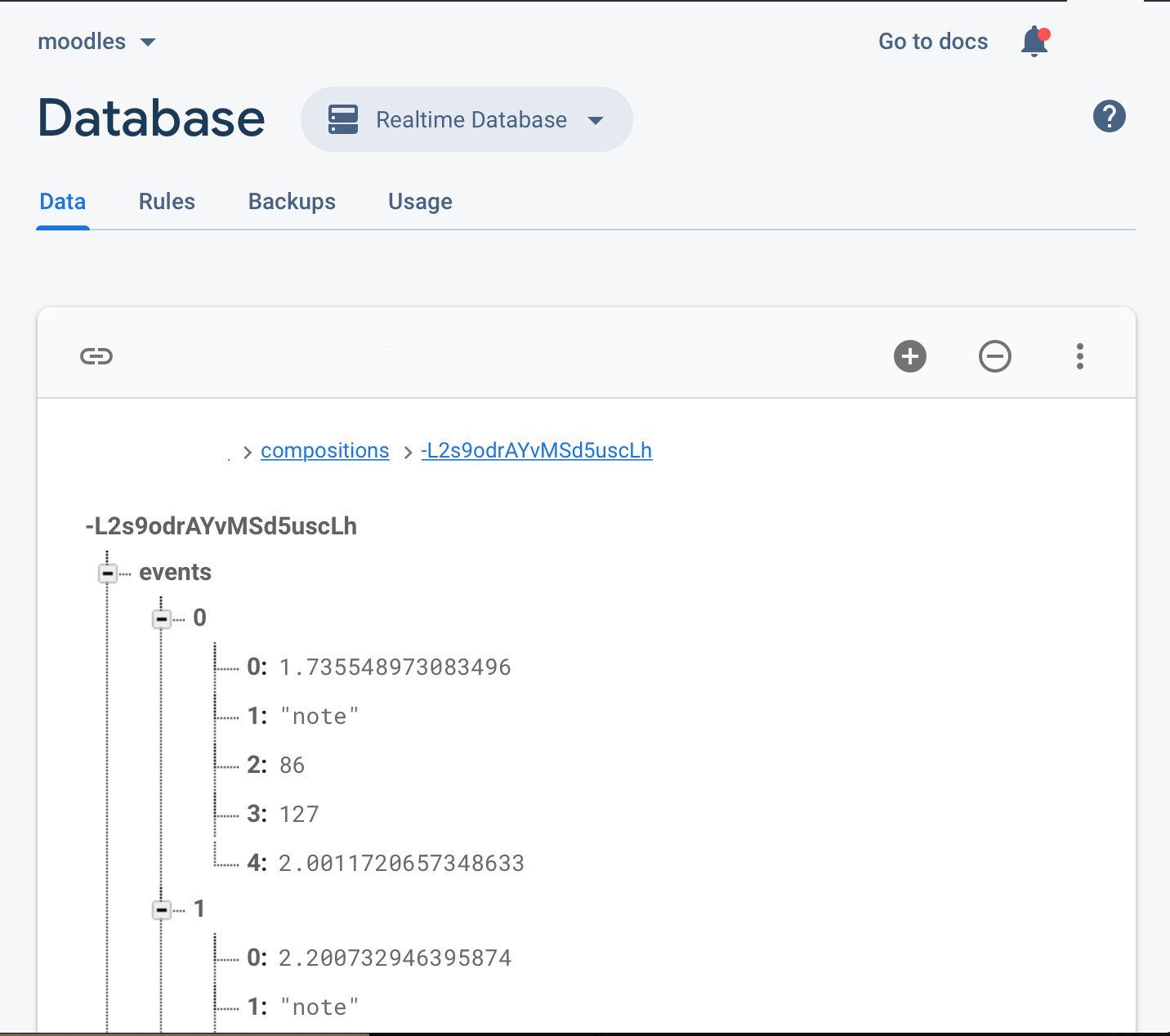

Real Example

Here is an example of a user-created composition:

    {
        "length": 33.733943939208984,
        "name": "Mary",
        "tempo": 60,
        "events": [
            [1.735548973083496, "note", 86, 127, 2.0011720657348633],
            [2.200732946395874, "note", 82, 127, 2.0007500648498535],
            [2.666996955871582, "note", 80, 127, 1.5799760818481445],
            [3.134163022041321, "note", 78, 127, 2.001255989074707],
            [4.6933770179748535, "note", 84, 127, 0.4515969753265381],
            [3.976855993270874, "note", 74, 127, 2.0013020038604736],
            [4.24698793888092, "note", 80, 127, 2.0012450218200684],
            [4.441772937774658, "note", 79, 127, 2.0023610591888428],
            [4.9407700300216675, "note", 83, 127, 2.0013009309768677],
            [5.144986987113953, "note", 84, 127, 2.0023430585861206],
            [5.47352397441864, "note", 86, 127, 2.001214027404785],
            [7.661324977874756, "note", 84, 127, 1.3822569847106934],
            [7.083531022071838, "note", 82, 127, 2.0057259798049927],
            [7.427384972572327, "note", 78, 127, 2.0109879970550537],
            [7.8482489585876465, "note", 74, 127, 2.0053189992904663],
            [8.159757018089294, "note", 80, 127, 2.008544921875],
            [8.585530042648315, "note", 83, 127, 2.001150965690613],
            [9.043583035469055, "note", 84, 127, 2.006042003631592],
            [9.545789003372192, "note", 87, 127, 2.0242420434951782],
            [10.068104982376099, "note", 86, 127, 2.001162052154541],
            [11.023968935012817, "note", 90, 127, 2.0013060569763184],
            [12.052382946014404, "note", 91, 127, 2.03545606136322],
            [13.504536986351013, "note", 85, 127, 2.000174045562744],
            [13.735672950744629, "note", 88, 127, 2.0012930631637573],
            [13.969988942146301, "note", 89, 127, 2.001507043838501],
            [14.230641961097717, "note", 84, 127, 2.000688076019287],
            [14.501525044441223, "note", 82, 127, 2.0002689361572266],
            [16.187057971954346, "note", 85, 127, 0.9669140577316284],
            [17.41103994846344, "note", 88, 127, 1.2865140438079834],
            [17.153972029685974, "note", 85, 127, 2.0012149810791016],
            [17.669700980186462, "note", 89, 127, 2.032960057258606],
            [17.962610006332397, "note", 84, 127, 2.023123025894165],
            [18.2659410238266, "note", 86, 127, 2.0036189556121826],
            [18.69755494594574, "note", 88, 127, 2.0013450384140015],
            [18.985236048698425, "note", 82, 127, 2.0052649974823],
            [19.462666034698486, "note", 80, 127, 2.029099941253662],
            [19.757699966430664, "note", 81, 127, 2.0025429725646973],
            [20.59239101409912, "note", 90, 127, 2.000859022140503],
            [21.264801025390625, "note", 89, 127, 2.0012810230255127],
            [22.036635994911194, "note", 93, 127, 2.00110399723053],
            [23.480831027030945, "note", 95, 127, 1.3238129615783691],
            [24.80464494228363, "note", 95, 127, 2.005560040473938],
            [26.1202529668808, "note", 94, 127, 1.3467869758605957],
            [27.467041015625, "note", 94, 127, 2.0007799863815308],
            [28.701019048690796, "note", 78, 127, 2.0011409521102905],
            [29.587227940559387, "note", 72, 127, 2.001275062561035],
            [30.413745045661926, "note", 73, 127, 2.0694299936294556],
            [31.169628024101257, "note", 74, 127, 2.016849994659424],
            [31.938958048820496, "note", 72, 127, 1.7940999269485474],
            [32.785423040390015, "note", 91, 127, 0.9476529359817505],
            [32.18381094932556, "note", 77, 127, 1.549280047416687],
            [32.37651801109314, "note", 81, 127, 1.3565880060195923],
            [32.48401200771332, "note", 82, 127, 1.2491090297698975],
            [32.115553975105286, "note", 76, 127, 1.6175800561904907]
        ]
    }
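Note that the events in this recording are not strictly in time order (for example, the note at beat 4.69 is stored before the note at beat 3.98), so a reader of this format may want to sort by start time before scheduling playback. The sketch below decodes a document of this shape with `Decodable` and returns the notes in playback order. `DecodedNote`, `DecodedComposition`, and `notesInPlaybackOrder` are hypothetical names, not the app's own types.

```swift
import Foundation

// One positional row: [time, type, number, velocity, duration].
struct DecodedNote: Decodable {
    let time: Double
    let type: String
    let number: Int
    let velocity: Int
    let duration: Double

    init(from decoder: Decoder) throws {
        var row = try decoder.unkeyedContainer()
        time = try row.decode(Double.self)
        type = try row.decode(String.self)
        number = try row.decode(Int.self)
        velocity = try row.decode(Int.self)
        duration = try row.decode(Double.self)
    }
}

// "length" and "tempo" are optional because the shorter example omits them.
struct DecodedComposition: Decodable {
    let name: String
    let length: Double?
    let tempo: Double?
    let events: [DecodedNote]
}

/// Decodes a composition and returns its "note" events sorted by start time.
func notesInPlaybackOrder(from json: String) throws -> [DecodedNote] {
    let composition = try JSONDecoder().decode(DecodedComposition.self, from: Data(json.utf8))
    return composition.events
        .filter { $0.type == "note" }
        .sorted { $0.time < $1.time }
}

// Two rows copied from the "Mary" composition above, in their stored order.
let excerpt = """
{"length": 33.733943939208984, "name": "Mary", "tempo": 60, "events": [
  [4.6933770179748535, "note", 84, 127, 0.4515969753265381],
  [3.976855993270874, "note", 74, 127, 2.0013020038604736]
]}
"""
if let notes = try? notesInPlaybackOrder(from: excerpt) {
    print(notes.map { $0.number })  // [74, 84]
}
```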

Making of a Musical Quiz

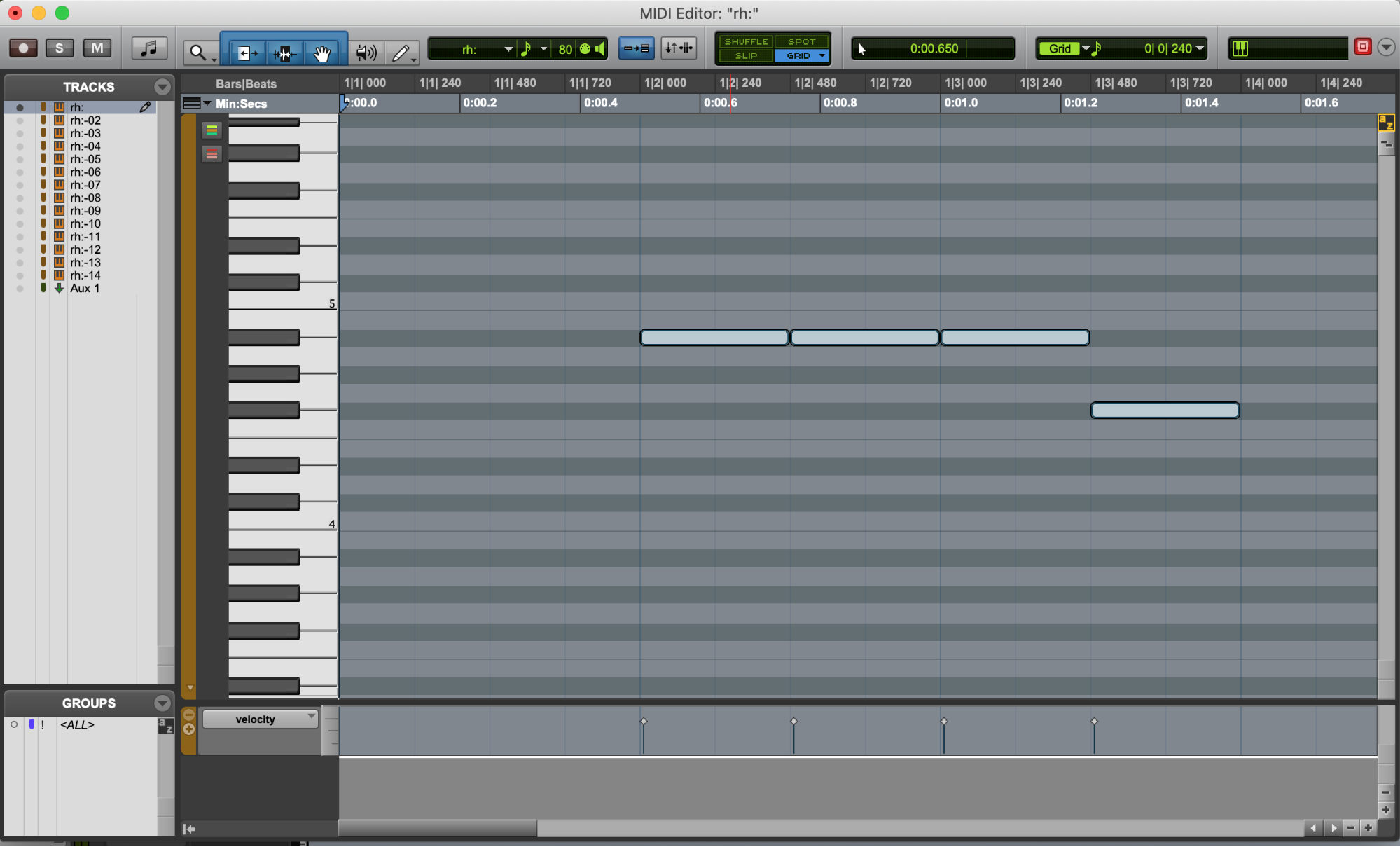

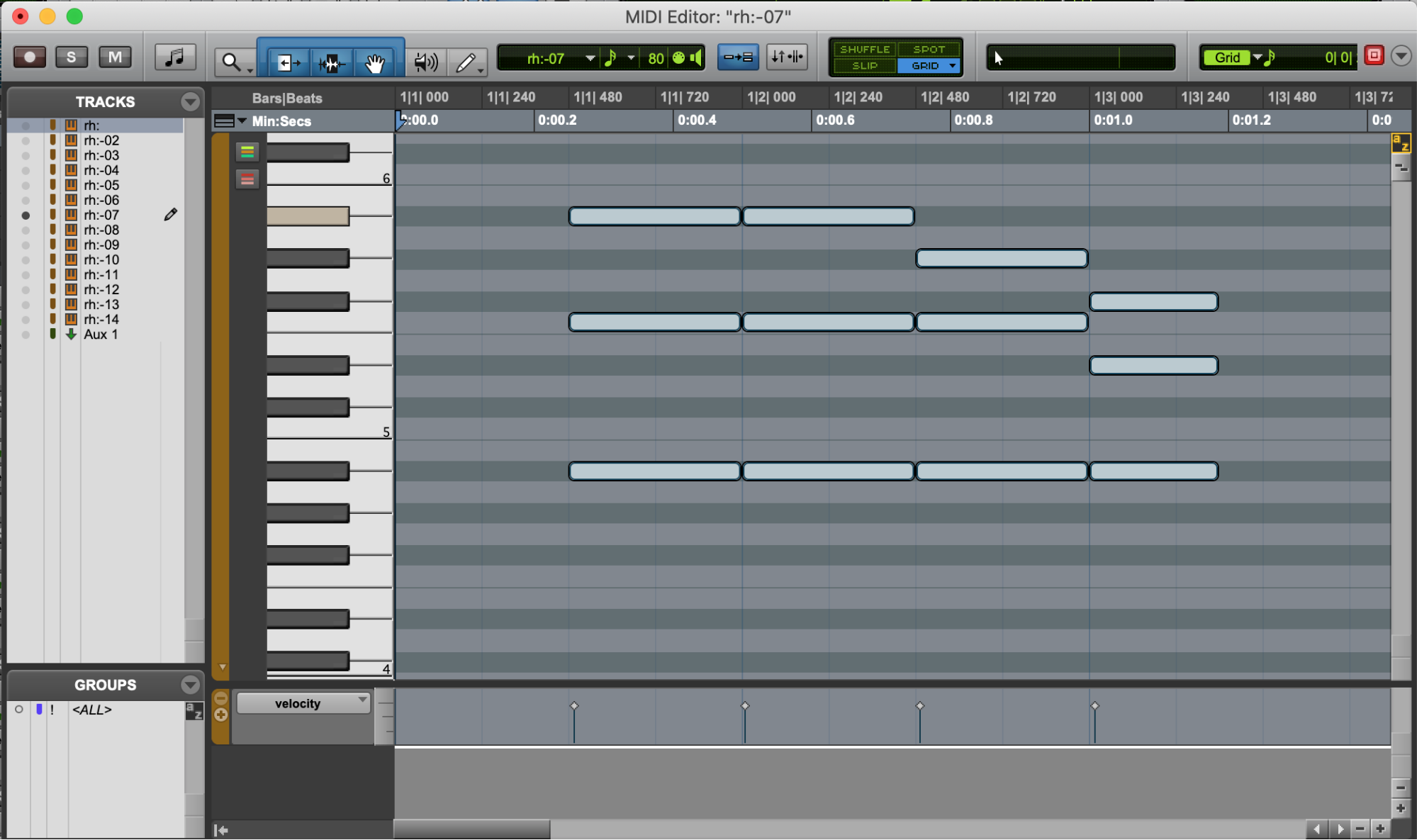

To develop a game of Simon, the team developed a process for generating MIDI files and converting them into MIDI JSON. Below is an example of the MIDI files. The compositions were edited in Pro Tools and then exported.

The quiz consists of Beethoven's 5th Symphony chopped up into 14 sections, which we later structured into rounds.

Round #1 is very recognizable. The screenshot shows the start of Beethoven's famous "da da da dum."

This example shows what it’s like for a user to tap three notes at the same time.
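The article does not show how a round is scored, so the following is only a sketch of one possible check for a Simon-style round. It groups notes that start within a small tolerance into unordered chords, then compares the expected and played sequences step by step. `QuizNote`, `chordSteps`, `roundPassed`, the 0.1-beat tolerance, and the sample timings are all assumptions made for illustration.

```swift
// Hypothetical note record: start time in beats and MIDI note number.
struct QuizNote {
    let time: Double
    let number: Int
}

/// Groups notes whose start times fall within `tolerance` beats of the first
/// note in the group, so simultaneous taps are compared as an unordered chord.
func chordSteps(_ notes: [QuizNote], tolerance: Double) -> [Set<Int>] {
    var steps: [Set<Int>] = []
    var groupStart: Double? = nil
    for note in notes.sorted(by: { $0.time < $1.time }) {
        if let start = groupStart, note.time - start <= tolerance {
            steps[steps.count - 1].insert(note.number)
        } else {
            steps.append([note.number])
            groupStart = note.time
        }
    }
    return steps
}

/// A round passes when the played steps match the expected steps in order.
/// Rhythm is deliberately ignored in this sketch; only pitch order is checked.
func roundPassed(expected: [QuizNote], played: [QuizNote], tolerance: Double = 0.1) -> Bool {
    return chordSteps(expected, tolerance: tolerance) == chordSteps(played, tolerance: tolerance)
}

// Illustrative data only: the opening motif as G, G, G, E-flat, with made-up timings.
let expectedRound = [QuizNote(time: 0, number: 67), QuizNote(time: 0.5, number: 67),
                     QuizNote(time: 1, number: 67), QuizNote(time: 1.5, number: 63)]
let playedRound = [QuizNote(time: 10, number: 67), QuizNote(time: 10.4, number: 67),
                   QuizNote(time: 11, number: 67), QuizNote(time: 11.6, number: 63)]
print(roundPassed(expected: expectedRound, played: playedRound))  // true
```

Comparing sets rather than arrays within a step means three fingers landing a few hundredths of a beat apart still count as the same chord, whichever finger lands first.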

With the MIDI files imported into the iOS app, the data is then parsed for MIDI playback. The function below shows a sample of what we had to do to parse a MIDI file dynamically into the same composition structure used for MIDI JSON.

    import Foundation
    import AudioKit
    import MagicalRecord
    import PromiseKit

    // Parses a MIDI file into an MDComposition (note events, length, tempo, name).
    func parse(fileUrl: URL) -> Promise<MDComposition> {
        return Promise(resolvers: { fulfill, reject in
            // Use the file name without its extension as the composition name.
            let name = fileUrl.lastPathComponent.replacingOccurrences(of: "." + fileUrl.pathExtension, with: "")
            var length: Double = 0
            var tempo: Double = 0
            var events = [MDNoteEvent]()

            AKSequencer(urlPath: fileUrl as NSURL).tracks.forEach { track in
                // The composition is as long as its longest track.
                let trackLength = Double(track.length)
                if trackLength > length {
                    length = trackLength
                }

                // Walk every event in the track with Core Audio's MusicEventIterator.
                var iterator: MusicEventIterator? = nil
                NewMusicEventIterator(track.internalMusicTrack!, &iterator)
                var eventTime = MusicTimeStamp(0)
                var eventType = MusicEventType()
                var eventData: UnsafeRawPointer? = nil
                var eventDataSize: UInt32 = 0
                var hasNextEvent: DarwinBoolean = false

                MusicEventIteratorHasCurrentEvent(iterator!, &hasNextEvent)
                while hasNextEvent.boolValue {
                    MusicEventIteratorGetEventInfo(iterator!, &eventTime, &eventType, &eventData, &eventDataSize)

                    // Note messages become MDNoteEvents.
                    if kMusicEventType_MIDINoteMessage == eventType {
                        let noteMessage: MIDINoteMessage = (eventData?.bindMemory(to: MIDINoteMessage.self,
                                                                                  capacity: 1).pointee)!
                        let noteEvent = MDNoteEvent(time: eventTime,
                                                    number: Int16(noteMessage.note),
                                                    duration: Double(noteMessage.duration),
                                                    velocity: Int16(noteMessage.velocity))
                        events.append(noteEvent)
                    }

                    if kMusicEventType_Meta == eventType {
                        var meta: MIDIMetaEvent = (eventData?.load(as: MIDIMetaEvent.self))!
                        // 0x01 is the text meta event; its payload is parsed here as a numeric tempo string.
                        if meta.metaEventType == 0x01 {
                            var dataArr = [UInt8]()
                            withUnsafeMutablePointer(to: &meta.data, { ptr in
                                for i in 0 ..< Int(meta.dataLength) {
                                    dataArr.append(ptr[i])
                                }
                            })
                            let oldString = NSString(bytes: dataArr, length: dataArr.count,
                                                     encoding: String.Encoding.utf8.rawValue)
                            if let string = oldString as? String,
                                let beats = Double(string) {
                                tempo = beats
                            }
                        }
                    }

                    // Advance to the next event, if there is one.
                    MusicEventIteratorNextEvent(iterator!)
                    MusicEventIteratorHasCurrentEvent(iterator!, &hasNextEvent)
                }
            }

            let composition = MDComposition(noteEvents: events,
                                            length: length,
                                            tempo: tempo,
                                            name: name,
                                            id: "")
            fulfill(composition)
        })
    }

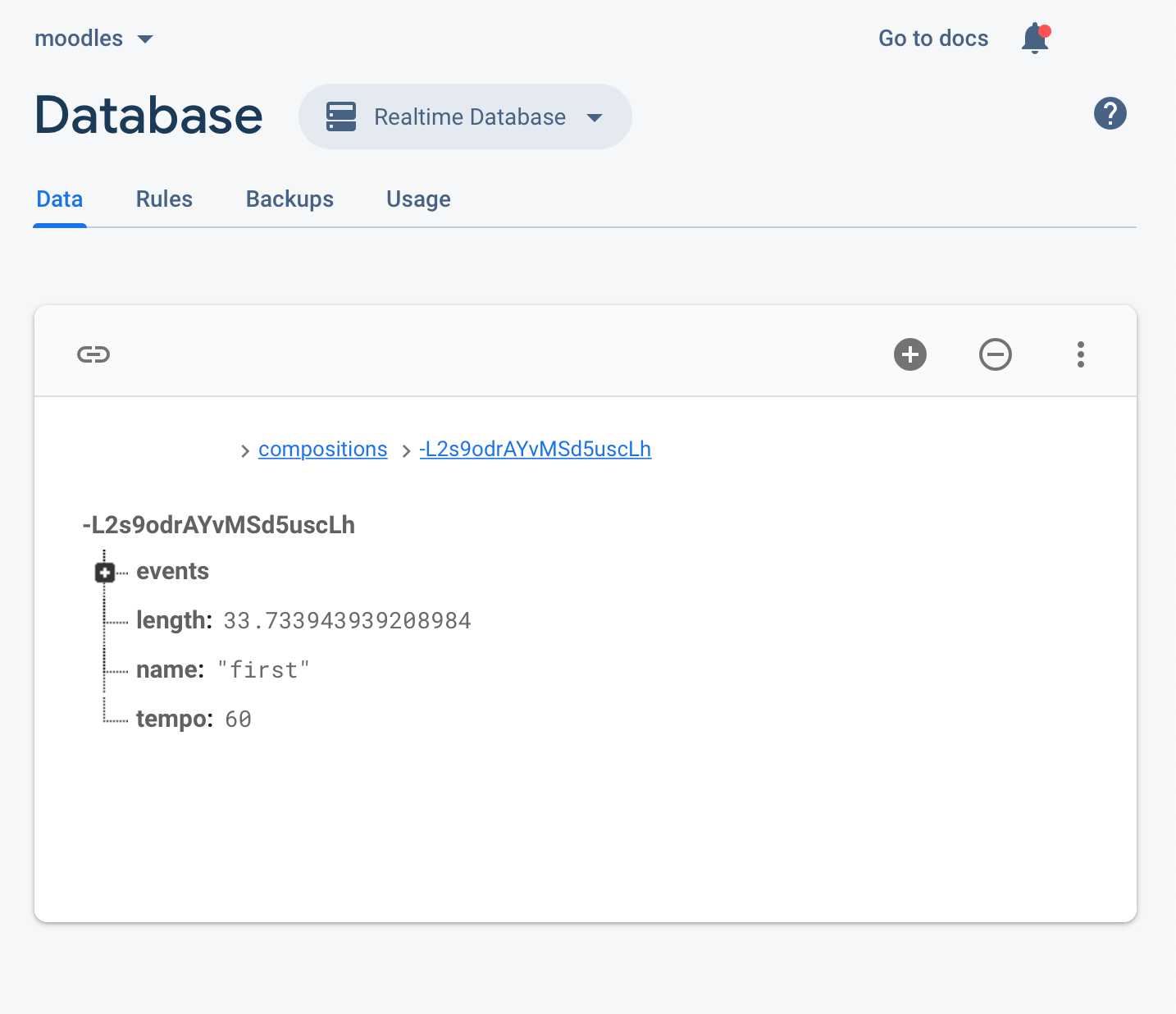

Storing User Compositions into the Cloud

We used Firebase as a real-time data store for recording user compositions. Converting a user's taps into MIDI JSON enabled us to publish each composition to Firebase for both storage and sharing. Because a composition is a short list of note events rather than recorded audio, latency stayed below 300 ms and file size was never an issue.
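To give a feel for how small these payloads are, the sketch below builds the kind of dictionary that could be handed to a real-time store and measures its serialized size. The Firebase calls themselves are left out because the article does not show them; `jsonByteCount` is a hypothetical helper, and the three rows are copied from the "Mary" composition above.

```swift
import Foundation

/// Returns the size in bytes of a composition document once serialized as JSON.
func jsonByteCount(name: String, tempo: Double, events: [[Any]]) throws -> Int {
    let document: [String: Any] = ["name": name, "tempo": tempo, "events": events]
    return try JSONSerialization.data(withJSONObject: document).count
}

// Three rows copied from the "Mary" composition.
let sampleRows: [[Any]] = [
    [1.735548973083496, "note", 86, 127, 2.0011720657348633],
    [2.200732946395874, "note", 82, 127, 2.0007500648498535],
    [2.666996955871582, "note", 80, 127, 1.5799760818481445]
]

if let bytes = try? jsonByteCount(name: "Mary", tempo: 60, events: sampleRows) {
    print("\(sampleRows.count) events -> \(bytes) bytes")
}
```

Each row in the listing is roughly 57 characters as written, so the full 54-event "Mary" composition comes to something on the order of 3 KB of text.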