Here at USC Radio Group, the new media team is working on a redesign of the Classical KUSC and Classical KDFC websites. The two sites currently run on different content management systems, and one of our digital goals is to migrate both to WordPress. We like WordPress: the plug-ins are plentiful, the community is strong, and there's no shortage of answers on Stack Overflow.

During the planning stages of a redesign, one of the first things any team should do is conduct a content audit. A content audit helps managers understand the scope of the project and gives the programming team a chance to reflect on its content. There are many ways to build one, including a CSV export, a phpMyAdmin export, a MySQL dump, or even a hand-crafted Google Spreadsheet. An even better tool, though, is Crawly.

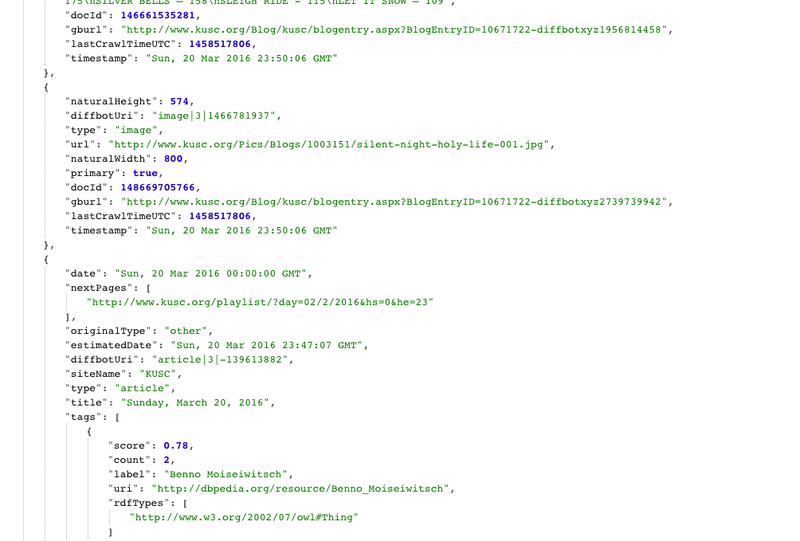

Crawly is brilliant because it both scrapes your data and packages it neatly as JSON (or CSV). For a software developer, JSON is one of the most convenient formats for structured data, and Crawly produces it in a matter of seconds.
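Once you have a JSON export, turning it into a working content inventory takes only a few lines. The sketch below is a minimal example under stated assumptions: the field names (`url`, `title`, `word_count`) and the sample data are hypothetical, not Crawly's actual schema. It drops duplicate URLs and renders the result as CSV for a spreadsheet-based audit.

```python
import csv
import io
import json

# Hypothetical sample of a scraper's JSON export. The field names ("url",
# "title", "word_count") are assumptions for illustration, not Crawly's schema.
SAMPLE_EXPORT = """
[
  {"url": "https://example.org/about", "title": "About", "word_count": 420},
  {"url": "https://example.org/schedule", "title": "Schedule", "word_count": 1310},
  {"url": "https://example.org/about", "title": "About", "word_count": 420}
]
"""


def build_inventory(raw_json):
    """Parse the export and return one record per unique URL, sorted by URL."""
    records = json.loads(raw_json)
    unique = {}
    for record in records:
        # Keep the first record seen for each URL so duplicate crawls are dropped.
        unique.setdefault(record["url"], record)
    return [unique[url] for url in sorted(unique)]


def to_csv(rows):
    """Render inventory records as CSV text for a spreadsheet-based audit."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=["url", "title", "word_count"])
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


inventory = build_inventory(SAMPLE_EXPORT)
print(len(inventory))  # 2
print(to_csv(inventory))
```

Keying on the URL is a simple way to collapse pages that were crawled more than once; a real audit might also normalize trailing slashes or query strings before comparing.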

Resources

If you're looking for other web scraping tools, check out this list